Automatically Create SharePoint Subfolders Using Cloud Flow and Environment Variable (Part 4 of 8)

This is the fourth post in a series of Automating SharePoint Integration with

Dataverse using Power Automate. You can check out the other posts via these

links (Part 1,

Part 2,

Part 3,

Part 5, Part 6, Part7, Part 8)

In some of my projects, there is a requirement to automatically create

subfolders when the document location is created. In that way, the users will

have a proper folder structure to upload the related documents based on the

document type (e.g. Supporting Documents, Approval Letters, etc.).

In this post, you will learn about how to automatically create the

subfolders based on the JSON value defined in the Environment Variable.

To make it generic for all tables, the cloud flow will be triggered when the

Document Location is created (which will be automatically created by the flows

as mentioned in

Part 1 and Part 3).

Before we start building the flow, we need to create the environment

variable to store the folder structure of the subfolders.

This is the sample JSON that I use for the cloud flow in this post. The

folderstructure JSON contains an array of tables with logical name, entity

set name and the name of the SharePoint library (which is mostly the display

name of the table). Each table contains the folders array that specifies the

subfolder structure. You only need to specify the lowest level folder name

and not its parent folders. E.g. if you create a SharePoint folder with

"Private/Financial Statements/Bank Statements" path, it will create all x3

folders and you don't have to create "Private" and "Private/Financial

Statements" folders individually.

{

"folderstructure": [

{

"logicalname": "contact",

"entitysetname": "contacts",

"libraryname": "Contact",

"folders": [

{

"name": "Public"

},

{

"name": "Private/Identity Documents"

},

{

"name": "Private/Proof of Address"

},

{

"name": "Private/Financial Statements/Bank Statements"

},

{

"name": "Private/Financial Statements/Credit Card Statements"

}

]

},

{

"logicalname": "lzw_application",

"entitysetname": "lzw_applications",

"libraryname": "Application",

"folders": [

{

"name": "Approval Letters"

},

{

"name": "Supporting Documents"

}

]

},

{

"logicalname": "incident",

"entitysetname": "incidents",

"libraryname": "Case",

"folders": [

{

"name": "Screenshots"

},

{

"name": "Error Logs"

}

]

}

]

}

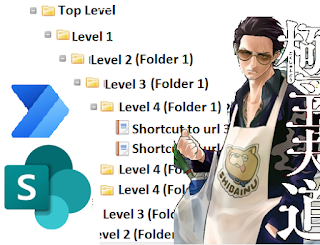

These are all the steps included in the cloud flow for this solution.

The trigger is on Create of the Document Location. As I explained in

Part 3, the document location can also be created for the parent folder inside the

related Contact or Account folders (for the folder highlighted below). In that

case, we do not want to run this flow to create subfolders. That is why the

first step in the flow is to check if the Regarding contains any data and

cancel the flow if there is no value.

parameters('SharePoint Folder Structure (lzw_SharePointFolderStructure)')?['folderstructure']

I used the expression above to filter the folderstructure property of

SharePoint Folder Structure environment variable. You can either update the

expression based on the name of your environment variable or you can

create a Compose step, populate with the environment variable and peek code

to see the expression and add "?['folderstructure']" at the end.

We will use item()?['entitysetname'] property to filter because the Dataverse trigger output only contains

the Entity Set name for the table type of Regarding lookup. Again, the

Regarding (Type) available in the Dynamic Value list is incorrect and you

will have to use the _regardingobjectid_type property in the

expression to get the entity set name of the Regarding lookup (you can read

more about it in

Alex's post).

In this step, we will initialise three variables for three parameters required

by the SharePoint Create new folder action. The

SharePoint Site Address will be initialised with empty value and

populated in the steps below. The SharePoint Library will be

populated with the libraryname property of

the folderstructure JSON from the environment variable which will be the

output of the Filter Array step.

triggerOutputs()?['body/_regardingobjectid_type']first(body('Filter_SharePoint_Folder_Structure_array'))?['libraryname']

The Folder Name can be populated with the

Relative URL from the trigger output.

This query is a bit complicated. This step is not only to get the value

for SharePoint Site Address variable but also to retrieve

the whole folder path if the current document location is created inside the

related Contact or Account folder as mentioned in

Part 3.

parentsiteorlocation_sharepointsite($select=absoluteurl),parentsiteorlocation_sharepointdocumentlocation($select=relativeurl;$expand=parentsiteorlocation_sharepointdocumentlocation($select=relativeurl;$expand=parentsiteorlocation_sharepointsite($select=absoluteurl)))

You can copy the Expand Query above to use in your flow. The following is

the FetchXML equivalent of the Expand Query. The main objective of the query

is to retrieve all necessary data in a single query. With this query, we can

retrieve the absoluteurl of the SharePoint site (for

Scenario 1) if the current document location is directly under the

SharePoint document library (e.g. https://sharepointsite/sites/contact/Jim

Glynn). The query will also return the relativeurl of the

related parent locations (for Scenario 2) if the current document location

is created inside the related Contact or Account folder (e.g.

https://sharepointsite/sites/contact/Jim Glynn/incident/Test Case).

<fetch>

<entity name="sharepointdocumentlocation">

<attribute name="relativeurl" />

<filter>

<condition attribute="sharepointdocumentlocationid" operator="eq" value="00000000-0000-0000-0000-000000000000" />

</filter>

<link-entity name="sharepointsite" from="sharepointsiteid" to="parentsiteorlocation" link-type="outer">

<attribute name="absoluteurl" />

</link-entity>

<link-entity name="sharepointdocumentlocation" from="sharepointdocumentlocationid" to="parentsiteorlocation" link-type="outer">

<attribute name="relativeurl" />

<link-entity name="sharepointdocumentlocation" from="sharepointdocumentlocationid" to="parentsiteorlocation" link-type="outer">

<attribute name="relativeurl" />

<link-entity name="sharepointsite" from="sharepointsiteid" to="parentsiteorlocation" link-type="outer">

<attribute name="absoluteurl" />

</link-entity>

</link-entity>

</link-entity>

</entity>

</fetch>

If the current document location is directly under the SharePoint document

library (Scenario 1), the value for the following expression will contain

a value. In that case, we can populate the SharePoint Site Address variable with that value.

outputs('Get_Document_Location_by_ID')?['body/parentsiteorlocation_sharepointsite/absoluteurl']

If the current document location is created inside the related Contact or

Account folder (Scenario 2), the expression for the SharePoint Site Address variable will be as follows. (which is under x2 additional

parentsiteorlocation_sharepointdocumentlocation)

outputs('Get_Document_Location_by_ID')?['body/parentsiteorlocation_sharepointdocumentlocation/parentsiteorlocation_sharepointdocumentlocation/parentsiteorlocation_sharepointsite/absoluteurl']

For Scenario 2, the SharePoint Library will not be

the libraryname property of the current table. It will be

the library name of the parent Contact or Account. That is the reason why

another Filter Array step is required to filter by the logical name using

the relativeurl of great grandparent document location.

outputs('Get_Document_Location_by_ID')?['body/parentsiteorlocation_sharepointdocumentlocation/parentsiteorlocation_sharepointdocumentlocation/relativeurl']

Then, use the following expression to set

the SharePoint Library variable.

first(body('Filter_SharePoint_Folder_Structure_array_for_SharePoint_Library'))?['libraryname']

The Folder Name is also not just Relative URL from the trigger output and it should also be combined with

relativeurl from parent document location grandparent document

location to build the whole folder path.

@{outputs('Get_Document_Location_by_ID')?['body/parentsiteorlocation_sharepointdocumentlocation/relativeurl']}/@{outputs('Get_Document_Location_by_ID')?['body/relativeurl']}/@{triggerOutputs()?['body/relativeurl']}

The final step is to loop through the folders array of the

JSON with the following expression and create a folder for each folder name

using

Create New Folder action

from SharePoint connector. The Site Address, Library and parent folder paths

can be populated with the variables from the steps above.

first(body('Filter_SharePoint_Folder_Structure_array'))?['folders']

Summary

Automatically creating the subfolders under the SharePoint document

folder for the tables can be achieved with Power Automate cloud flow by

storing the folder structure in the environment variable and retrieving

the folder path from the related document locations.

First of all, thank you for documenting all of this, it is incredibly helpful to see this. I'd be curious to know if you would ever consider swapping out folders for a choice column and using it for Metadata instead. Are you using folders for the permissions?

ReplyDeleteWe are not using folders for the permission; just for the categorization.

DeleteI did suggest that option to add metadata column to the client as per this blog post.

https://linnzawwin.blogspot.com/2020/06/how-to-add-metadata-to-sharepoint.html

However, the client prefers the folder structure with nested folders (partially due to the number of files and there could be multiple pages). And with just OOB functionality, updating the metadata of the file needs to be done by opening the document location in SharePoint (and the client prefers not to put more development effort).

Hello Linn, thank you so much for sharing those amazing series. I have a question lets say I have in a SharePoint library combination of files and folders around 200k and I want to continuously upload files/folders and assign permissions to users. Will anything stop me from performing any operations? Like assigning permissions, since I know it is not recommended to store more than 30K files in a single library.

ReplyDeleteSorry, Julien. I am not that knowledgeable in SharePoint so that I can't really answer if there is any limitation for having more than 30k files/folders in a single library.

DeleteHi Linn, I am facing this SharePoint error "No Active Document Location found" and I can actually add the location manually but I have to do that every time for each record! I know there must be a way to configure two "master" Document Locations for two document tabs in my model-driven app. However, I don't know how. Can you show me the way please? We can connect via teams or zoom (fingers crossed).

ReplyDeleteI am not too sure about your requirement. Why do you need to manually create the folder? Doesn't the out-of-the-box SharePoint integration auto create the folder when you click on the Documents tab?

DeleteOr are you trying to auto create the SharePoint folder? If so, check out the Part 1 blog post.

https://linnzawwin.blogspot.com/2021/08/automatically-create-sharepoint.html

I am not aware of any way to configure two "master" Document Locations for two document tabs. Are you referring to the custom Documents tab with a subgrid?

You can message me in LinkedIn if you want to send screenshots of your issue.