Retrieve more than 100,000 Dataverse Rows with List Records Action in Cloud Flow Using Skip Token

Have you ever wondered what the Skip Token parameter in the

List Records

action of Microsoft Dataverse connector

is for? In this post, I will explain how you can retrieve any number of rows, even more than 100,000 rows, by handling paging manually with the

Skip Token parameter.

By default, the List Records action in Power Automate can retrieve up to 5,000 rows only from the Microsoft Dataverse environment. If you want to retrieve more than

5k rows, you can enable Pagination in the Settings and set

the Threshold setting with a value up to 100,000

as mentioned in David Yack's

blog post. 100k is quite a lot of rows and in most cloud flows, it is hard to hit that maximum limit. The best approach is to

use the Filter Query

to filter out to the point that the data is manageable. But there are some

exceptional cases where you might have to retrieve more than 100k rows (e.g.

export the data out of the system as a file which needs to be imported into

another system).

There are also some caveats to enabling the Pagination in Settings and increasing the Threshold setting to

retrieve more than the default 5k rows.

- The data of the 5001st row onwards of the output does not contain the value for @OData.Community.Display.V1.FormattedValue

- @odata.nextLink property returns empty which means you cannot use the Skip Token parameter to retrieve the data with a batch of 100k rows

To overcome those problems or retrieve more than 100k rows, we can use

the Skip Token parameter as below. Thanks to Nuri Usta

for blogging about it

one of his blog posts.

There are two ways we can do the retrieving:

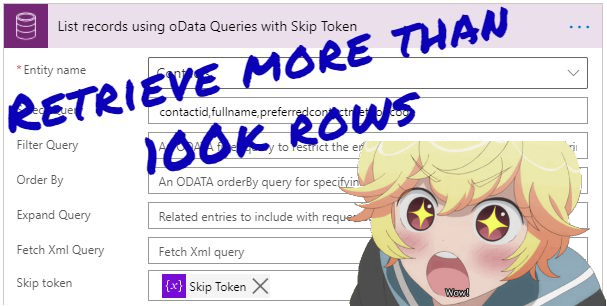

🔗 List Records using oData Queries with Skip Token Parameter

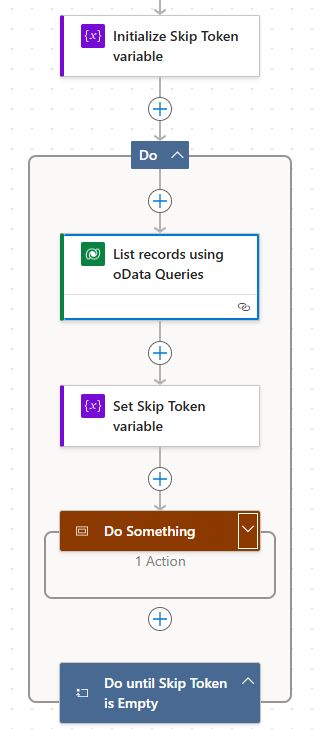

1. Initialise Skip Token Variable

First, initialise the Skip Token string variable with an empty value.2. Do Until Skip Token is Empty

Then, add a Do Until loop and inside the loop, retrieve the data with a List Rows action. If there are more than 5k rows, it will return the @odata.nextLink in the output to fetch the next page data. Populate the Skip Token parameter with the Skip Token variable which will be an empty string for the first loop. Make sure the Pagination is disabled in the List Records step setting or else, @odata.nextLink will be empty.

After that, set the Skip Token variable with the @odata.nextLink value from the List Rows action using the following expression.

if

(

empty

(

outputs('List_records_using_oData_Queries')?['body/@odata.nextLink']

),

'',

decodeUriComponent

(

last

(

split

(

uriQuery(outputs('List_records_using_oData_Queries')?['body/@odata.nextLink']),

'skiptoken='

)

)

)

)

What the expression does is get the annotation value of @odata.nextLink, split it to take the value after skiptoken query parameter and decode the URI component of it. If the @odata.nextLink is empty (when the total result set is less than 5k rows), the expression will return the empty string.

Then, process the data from the query by adding anything in the Do Something scope.

The loop will continue until all data is processed after the last 'List Rows' step, and the @odata.nextLink is empty.

Unlike using oData queries, using FetchXML queries in List Records action will only return a maximum of 5,000 rows regardless of the pagination threshold set as Thanura Wijesiriwardena mentioned in his blog post. However, paging with FetchXML is easier and you can do so by setting the page and count attributes of the fetch element.

1. Simple Paging using FetchXML

First, you need to initialise the Page Number integer variable and set as 1 for the first query. In the Do Until loop, retrieve the data using the List Records action with Fetch Xml Query parameter which has the page attribute with the variable value in the fetch element. Don't forget to change the default limit of the Until iteration if your result set is more than 300k rows. After List Records step, process the retrieved data before moving into the loop.

If the List Records step returns any data, increment the Page Number variable to loop again and retrieve the next page. If the List Records step no longer returns the data, set the Page Number variable with 0 to exit the loop.

2. Page Large Result Sets using FetchXML with Paging Cookie

When you query for the large result set, it is recommended to use the paging cookie which makes paging faster. In my experience, query using the paging cookie is improved but not so drastically. For 460k rows, it saved 18 seconds (from 3:19 to 3:01) by using the paging cookie. Debajit Dutta has blogged about how to extract the paging cookie from the output of the List Records action by manipulating the @Microsoft.Dynamics.CRM.fetchxmlpagingcookie annotation in his blog post.

The flow would be similar to the previous one but one more Paging Cookie string variable is required. In this flow, you don't have to reset the page number to exit the Do Until loop because you can use Paging Cookie which will be empty when there is no more data. The flow will loop until the Page Number variable NOT equal to 1 and the Paging Cookie variable is empty. The List Records step is also the same except the fetch element contains the page-cookie attribute with the Paging Cookie variable. After processing the retrieved data, increment the Page Number variable and set the Paging Cookie variable.You can find step by step details about extracting the paging cookie in Debajit's blog post, but in my flow, I extracted the paging cookie with a single expression to save some API calls.

if

(

empty(outputs('List_records_using_FetchXML_Initial_Query')?['body']?['@Microsoft.Dynamics.CRM.fetchxmlpagingcookie']),

'',

replace

(

replace

(

replace

(

decodeUriComponent

(

decodeUriComponent

(

first(split(last(split(outputs('List_records_using_FetchXML_Initial_Query')?['body']?['@Microsoft.Dynamics.CRM.fetchxmlpagingcookie'], 'pagingcookie="')), '" '))

)

),

'<', '<'

),

'>', '>'

),

'"','"'

)

)

The value of @Microsoft.Dynamics.CRM.fetchxmlpagingcookie annotation looks something like this and we need the value of the pagingcookie as highlighted below.

<cookie pagenumber="2" pagingcookie="%253ccookie%2520page%253d%252291%2522%253e%253ccontactid%2520last%253d%2522%257b21883F50-3084-E911-A850-000D3AE02BC5%257d%2522%2520first%253d%2522%257bB5C46C64-2C84-E911-A850-000D3AE02BC5%257d%2522%2520%252f%253e%253c%252fcookie%253e" istracking="False" />

To do so, we can use first(split(last(split(<<String>>, 'pagingcookie="')), '" ')) expression to get the value between the two text. Thanks to Antti Pajunen for sharing that tip in his session at Automate Saturday.

%253ccookie%2520page%253d%252291%2522%253e%253ccontactid%2520last%253d%2522%257b21883F50-3084-E911-A850-000D3AE02BC5%257d%2522%2520first%253d%2522%257bB5C46C64-2C84-E911-A850-000D3AE02BC5%257d%2522%2520%252f%253e%253c%252fcookie%253e

The value is double URL encoded, so x2 decodeUriComponent functions are required to get this value.

<cookie page="91"><contactid last="{21883F50-3084-E911-A850-000D3AE02BC5}" first="{B5C46C64-2C84-E911-A850-000D3AE02BC5}" /></cookie>

These special characters < > ' in FetchXML going to cause Invalid XML erorr, so replace those with HTML character reference (because there is no htmlEncode function available for Power Automate expressions).

<cookie page="91"><contactid last="{21883F50-3084-E911-A850-000D3AE02BC5}" first="{B5C46C64-2C84-E911-A850-000D3AE02BC5}" /></cookie>

Finally, add empty() check to avoid null exception.

Summary

By using part of the @odata.nextLink as Skip Token parameter in List Records using oData queries, you can retrieve any number of rows even more than the 100k limit.

For List Records using FetchXML queries, you can paginate with 5k rows per page by setting the page attribute of the fetch element in FetchXML. But to improve the performance when querying large volume of data, you may need to extract the value for page-cookie attribute from @Microsoft.Dynamics.CRM.fetchxmlpagingcookie annotation.

This is good article!

ReplyDeleteNYC article

ReplyDeletewonderful!!!

ReplyDeleteI lost one day trying to place pencil signature in a word doc. Without these tips I will lost much more time. Thank you so much!

ReplyDeleteVery good article! When the fetch returns zero results this flow configuration ended up in an infinite loop.

ReplyDeleteHi Andreas,

DeleteThanks for your comment. Which flow configuration are you referring to?

For 1. Simple Paging using FetchXML, Page Number variable will become 0 and exit the loop if the fetch returns zero results.

For 2. Page Large Result Sets using FetchXML with Paging Cookie, the Paging Cookie variable will be empty and exit the loop if the fetch returns zero results.

Thanks a lot, you given me solution for error: Malformed XML.

ReplyDeleteWhere is the solution of malformed xml im getting it too

Deletegreat article. Quick question. Everything works perfectly with paging cookies in fetch XML except for the do until expression. It seems to always run two loops and then stop even though I know there's more things to loop through.

ReplyDeleteFor FetchXML, increment the Page Number variable to loop again and retrieve the next page if the List Records step returns any data. If the List Records step no longer returns the data, set the Page Number variable with 0 to exit the loop.

DeleteIf it just runs two loops can you please check if the 2nd loop returns any data?

Very nice article. Do you have any similar article to perform a bulk update for more than 100K records designed in a way that the flow does not take a lot of time?

ReplyDelete@Julien, you can change the Concurrency Control of the Apply to Each step and increase the Degree of Parallelism.

Deletehttps://blog.magnetismsolutions.com/blog/satyvirjasra/2019/10/22/improving-microsoft-flow-runtime-using-concurrency-control

The maximum value is 50 but setting the max would be highly likely to hit the service protection API limit (6000 within the 5 minute sliding window) and some of the updates will be failed. Just try to find the balance by gradually increasing the number.

https://docs.microsoft.com/en-us/powerapps/developer/data-platform/api-limits

The Do Until loop, where the condition has been validated as

ReplyDelete"Run the logic initially, once it is completed, it should validate the condition if Skip token is empty, If it is empty it should run again and this way it will go to infinite times to loop because logically the condition should be it should run only if Skip token has some value in it.

Actually, "Do Until loop" condition works in a way that the loop runs again if the condition is false (i.e. run it until the condition is true). What you mentioned is "While loop" which is not part of cloud flows Control steps.

DeleteIn simple words, the loop will run (Do) again and again Until the skip token is empty.

I finally had a reason to try and implement the OData version of this (I have previously played around with the paging cookie version), so wanted to try out the steps you listed for the OData version, as it feels cleaner than setting some arbitrary pagination threshold number to get around the paging issue

ReplyDeleteI understand the premise of the article is based on when you know there's more records than the initial query returns so a skip token would always be generated from the initial query, but I wanted to also use this when you don't know how many records the initial query returns (and wanted to stick to the default 5k limit for the moment)

Hence, please correct me if I'm wrong, but I'm thinking you may want to add a condition to check the skip token variable, as one may never be generated from the first initial query you perform, and the do until loop would always execute at least once as the condition is checked at the end. and would pass an invalid skip token which generates the "malformed xml" error you mentioned

You're correct, Eric. I should have added a condition between the initial query and the do until loop to skip the loop if there is no more page after the initial query.

DeleteI have updated the post with a new solution (removed the initial query) since there is no "Malformed XML" error in the List Rows action for passing empty string to the Skip Token parameter.

Do you have any idea on how to save these records in a csv file? We fetch more than 100k records, how do we put all these together in a single csv?

ReplyDeleteApologies for missing out to reply this comment. Please refer to the following video on how to save the Dataverse data to .csv file.

Deletehttps://youtu.be/626sEDwgrYM

Excellent article. The OData version allows selecting more than 100k. Is that also possible with the FetchXML version? My query is more complex than what OData allows so I need to use FetchXML for more than 700k records.

ReplyDeleteApologies for the last reply. The second section of the blog post describes querying "Large Result Sets using FetchXML with Paging Cookie"

Deletehttps://linnzawwin.blogspot.com/2021/01/retrieve-more-than-100000-dataverse.html#fetchxmlpagingtoken

Unfortunately the skip token method is not working for me. I get malformed XML error if I use 'skiptoken=' as the split delineator, because the '=' hasn't been decoded yet. So I changed that to 'skiptoken%3D' and got just a tad further but still to no avail. DecodeURIComponent is only decoding one slash in the URI query parameter... output looks like the following. I've tried running that string through another decodeURIComponent but that isn't working:

ReplyDelete%3Ccookie%20pagenumber=%222%22%20pagingcookie=%22%253ccookie%2520page%253d%25221%2522%253e%253copportunityid%2520last%253d%2522%257b8B93C184-042C-4604-9625-3764CDDB447E%257d%2522%2520first%253d%2522%257bC9AEA6C2-2C85-4BA9-943D-0004310B3605%257d%2522%2520%252f%253e%253c%252fcookie%253e%22%20istracking=%22False%22%20/%3E

In addition to what you wrote, I would add that if someone wanted to use link-entity (to fetch records from a related table using a lookup column), none of these methods would work... It doesn't work because link-entity causes hidden sorting by values/ids within the related entity (the page you linked to "use the paging cookie which makes paging faster" even states this but doesn't provide a reason for it "Some queries do not support paging cookies. When paging cookies aren't supported by a query, no paging cookie value is returned with the result. For example, queries sorted using a link-entity attribute may not support paging cookies.

ReplyDeleteWhen Dataverse doesn't return a paging cookie, the paging model falls back to simple paging, with all the limitations that come with it.").

To get around this and make it work, in that case you need to force sorting within the main entity, like in this example:

In addition to what you wrote, I would add that if someone wanted to use link-entity (to fetch records from a related table using a lookup column), none of these methods would work... It doesn't work because link-entity causes hidden sorting by values/ids within the related entity (the page you linked to "use the paging cookie which makes paging faster" even states this but doesn't provide a reason for it "Some queries do not support paging cookies. When paging cookies aren't supported by a query, no paging cookie value is returned with the result. For example, queries sorted using a link-entity attribute may not support paging cookies.

ReplyDeleteWhen Dataverse doesn't return a paging cookie, the paging model falls back to simple paging, with all the limitations that come with it.").

To get around this and make it work, in that case you need to force sorting within the main entity, like in this example:

//

Delete//

//

//

//

//

//

//

//

//

//

//

//

//

//

//

//

//

For some reason Im not able to paste exaple xml :(

Delete-->

DeleteVery useful! I'm trying with the oData method. What if I'm having a buffer overflow issue? I'm trying to do a backup of a table, and I need all the columns. When doing the list I hit the buffer size limit before hitting the 5000 rows limit. I tried limiting the Row count to something smaller, but doing that nukes the Skip Token (it comes empty!). What can I do in this case?

ReplyDeleteVery helpful, used first approach, before this i wasted 4-5 hours to do this

ReplyDelete